2025 Consumer Digital Government Adoption Index

Balancing AI and human elements in government modernization

The digital government customer experience continues to improve — and AI could make or break it

Our annual survey of residents is in — and the findings point to new risks as governments adopt AI solutions.

Our annual consumer research is divided into two main sections: the first focuses on AI, and the second covers the current state of digital government. With accelerated modernization top of mind, we studied consumer sentiment about digital government, including user experience, payment preferences, and the introduction of AI into those spaces. The results offer insight into the human dimensions that digital government roadmaps must address.

AI puts digital government on a trust tightrope

Basic digital government services are now mainstream, offered by most government agencies and embraced by the majority of residents. And now artificial intelligence (AI) has opened the door to faster government modernization, with many IT and line-of-business leaders racing to implement it.

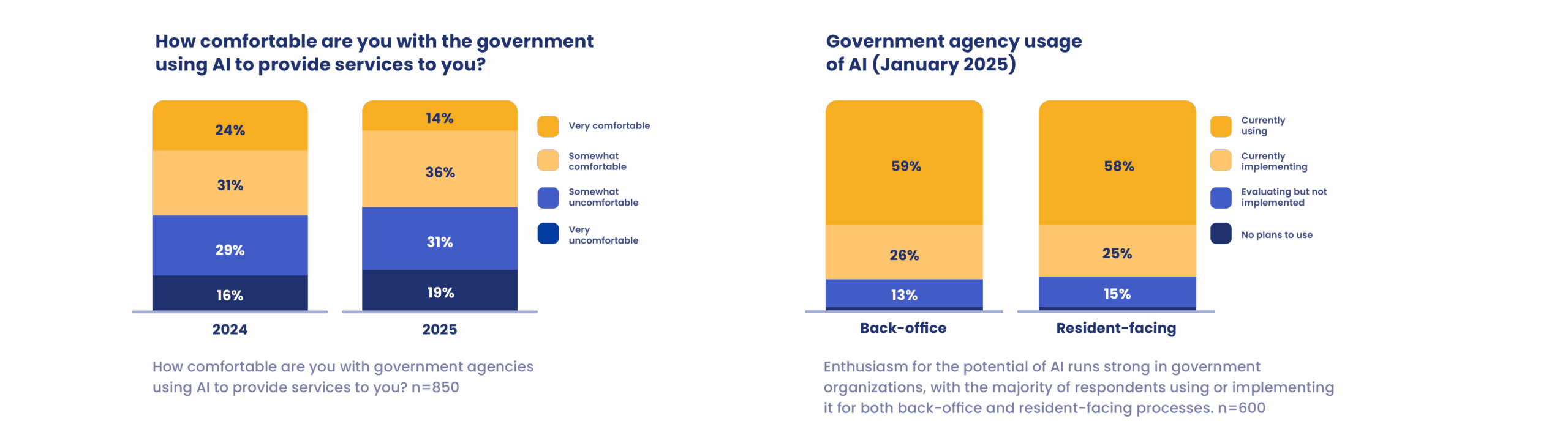

Government service delivery is likely to look completely different in just a few years: a proactive experience with more automation, personalization, and efficiency. Given AI's promise, it's no surprise that artificial intelligence and machine learning landed the #2 spot on NASCIO’s State CIO Top Ten Priority list for 2025. And when we surveyed government leaders earlier this year, over half said they’re already using AI for back-office and resident-facing processes.

Although AI can improve efficiency and deliver better digital experiences for residents, leaders must address human factors so that resident adoption doesn’t fall behind. Leaders should not underestimate the growing pains, genuine concerns, and risks to resident trust that accompany AI-driven modernization.

AI hype has outpaced consumer adoption

It’s not often that a technology grabs mainstream attention the way AI has. But while private-sector brands and government agencies rush to work AI into their tech solutions and digital services, consumers remain wary. Significant distrust of AI persists, and for a substantial portion of the public, concern about government use of AI outpaces concern about private-sector use.

Widespread consumer adoption of AI is still emerging. About one-third of consumers report they have never used AI for work or personal tasks, and fewer than half of respondents use AI more than a couple of times a year.

Research from Edelman found similar results: overall, 55% of respondents use generative AI; however, use reached majority levels only among Gen Z and Millennials. At work, AI use is still limited to fewer than 40% of the overall workforce. And according to Deloitte, at most organizations fewer than 60% of workers who have access to generative AI actually use it daily.

Government AI deployments are moving faster than resident comfort

For consumers, AI sparks conflicting emotions: curiosity, excitement, skepticism, and concern. Even as most government agencies pursue AI solutions, consumer comfort with government use of AI hasn’t budged since last year.

In fact, some age groups are even less comfortable than they were one year ago. Statistically significant drops in net comfort emerged for our Gen Z and Gen X respondent cohorts, while Millennial and Boomer respondent comfort remained steady within our study’s margin of error.

Consumers are prickly about AI access to personal data, even as they recognize AI’s potential to improve service delivery

Consumers expect government use of AI to be conscientious, not too personal. People recognize that AI can improve government operations, but applications that put personal data, security, or privacy at risk raise concerns. Pew Research Center likewise found that privacy is a major issue for consumers: 53% of respondents said AI does more to hurt than help people keep their personal information private.

Residents are more comfortable with specific uses than with the overall concept of AI in government.

This generalized unease may stem from limited knowledge of how AI tools could be applied to government use cases. Most respondents said they’d be comfortable with specific applications like AI-powered search or help finding particular government policies.

Also of note: our study found that using AI for cybersecurity in government is one of the areas where respondents were more skeptical. Agency leaders should make sure their disclosures about AI use describe the specific use case and its benefits, as well as the data protections in place.

Most respondents also expressed concern about the elimination of public-sector jobs (72%) and environmental harm (60%). These worries are not new: in our 2024 consumer survey, respondents also raised concerns about the human cost of AI, such as job losses.

Unsurprisingly, enthusiastic digital users are more comfortable with AI in government services. Those who use AI at least once a week are more than twice as likely to report comfort with government use as those who don’t. We expect that as more people use AI tools, their understanding of the tools’ benefits will grow, leading to greater acceptance of government use.

Trust hangs in the balance

Deploying AI in public-facing services introduces new levels of scrutiny, and any misstep (technical or ethical) can have lasting consequences for credibility.

Consumer trust in government was low even before AI reached its current prominence. The 2025 Edelman Trust Barometer found that only 54% of people trust government institutions to “do what is right,” trailing trust in businesses (65%) and in brands they personally use (80%). While overall trust in business has climbed over the past few years, trust in government has remained flat.

This poses a clear challenge for public agencies deploying AI: Government leaders have to proactively manage resident trust alongside the technical aspects of AI deployment.

Residents expect disclosure from the get-go.

Although government leaders are understandably focused on the technical aspects of AI, the human factors are just as critical to get right.

79% of our respondents said state and local agencies should be required to disclose when they use AI to provide services to residents.

For AI tools to gain public acceptance, transparent communication, clearly defined human oversight, and strong guardrails must be built in from day one.

Residents are AI-curious, but still skeptical.

A thoughtful, honest approach to communications will help build long-term public trust:

- Disclose, disclose, disclose: Proactively share your plans for AI. Provide educational content, make a general roadmap available to the public, and highlight on-staff experts. And monitor laws in your area — some jurisdictions already require disclosure.

- Create an advisory council: Hold regular meetings with peer groups, local tech leaders, academic institutions, and ethics experts, schedule regular trainings for staff, and share what you learn (public meetings, round tables, or social media are a few options).

- Seek feedback early and often: Don’t wait until an AI solution is live to get feedback. Give residents a chance to voice their opinions, test pilot programs, and ask questions.

- Pilot small programs first and communicate results: Make incremental moves — track and publish results, internally and to the public.

- Address security and compliance concerns clearly and immediately: Explain how data is protected, stored, and governed, and put that explanation front and center for residents.

- Explain the benefit of specific AI deployments as you release them: This is the fun one! Tie each deployment to a real-world outcome. For example:

  - “We’re adding a chatbot to reduce call center wait times.”

  - “We’re analyzing traffic patterns to ease rush hour congestion.”

  - “We’re using predictive maintenance to keep water systems running smoothly.”

Digital government still has some growing up to do

Most government agencies now offer at least a few basic online services, and consumers are naturally gravitating to them. Most of our respondents (73%) said they pay their government bills online, and 58% of those who haven’t paid online plan to do so in the future.

When it comes to the quality of the payment experience, the majority of consumers rate government payment processes as about as easy as, or even easier than, private-sector transactions. Because these interactions are transactional, a simple but functional experience is enough to get the job done, at least for savvy online users.

But there is still plenty of room for improvement: one in five respondents reported that the process is slower and the overall experience less easy than in the private sector. And consumer expectations are low when it comes to features that are now commonplace in private-sector offerings.

Even though resident expectations are low, leaders should strive to improve the overall experience. Intuitive workflows, plain-language help text, and clear instructions go a long way toward driving further digital adoption and reducing support inquiries from confused users.

Tried-and-true payment options remain popular

Year after year, consumers have cited payment options as one of the most important parts of a digital payment experience. Most people still prefer to use credit cards, debit cards, or ACH when they pay a government bill. Once those core options are in place, consider adding mobile payment options (like Apple Pay and Google Pay), peer-to-peer payments (e.g., Venmo or Zelle), and possibly cryptocurrency.

Prove that new technology can serve the public interest

Transparency should be a core pillar of your AI and modernization roadmap. Getting it right has the potential to do more than avoid damage to trust — there is an opportunity to strengthen trust and engagement. Human factors matter as much as technical ones. Proactive communication, visible guardrails, and responsible governance help make AI feel less risky and more like a helpful tool. Disclose your AI use and share your digital roadmap with residents, listen to their feedback, and build a track record of trust alongside modernization.